ML text summarization reliability

Comparing the reliability of OpenAI's ChatGPT, Microsoft's Bing, Kagi's Universal Summarizer, Google's Bard and other text summarization services

UPDATE 2023-03-25: added Google Bard, which was released the day after this article was published; updated the conclusion.

intro

Nowadays, one cannot write about Large Language Models (LLMs) or Natural Language Processing (NLP) without mentioning ChatGPT. "It will change the world", "it will make everything easy", "people will lose jobs because of it", blah blah... I'm neither impressed by it nor convinced of its ability to take over the world just yet.

In January, Microsoft invested another $10 billion in OpenAI. Since around that time, the quality of ChatGPT's responses has, subjectively, worsened. Writing about how ChatGPT made a mistake feels like kicking someone who is already down.

The world was in awe during the tech preview of OpenAI's ChatGPT. Then the bot got incorporated into Microsoft's Bing search engine. Google's CEO responded by showing a half-baked solution called Bard, which prompted his own employees to publicly refer to the exec's move as a "dumpster fire of a response". Shortly after, Bing Chat also started producing responses of questionable quality. On March 16, 2023, Baidu presented its LLM chatbot called Ernie Bot. The company's share price tanked 10%.

While hundreds of articles praising ChatGPT, if not more, are published daily, the public has noticed that "AI" (a futuristic acronym for machine learning models or artificial general intelligence) should not necessarily be trusted. And one may wonder how many of these posts are generated by ChatGPT itself.

like a politician

After sitting on notes for this post for some time, I decided that the "kicking something that is down" thinking does not apply. ChatGPT spills nonsense with too much confidence. To put it bluntly: it hallucinates and elaborately lies. As it is not a real, sentient, artificial intelligence, it likely does not understand that it is doing so. This aspect, and maybe hallucinations, differentiate the bot from a politician.

This tech is supposed to mimic human behavior. I don't trust humans who pretend to be knowledgeable and state bullshit, and in my opinion, ChatGPT currently deserves all the bad press it can get. Praise is not the way to improvement, and this aspect of ChatGPT has to improve.

text summarization tools

I opened this blog recently and soon started testing the website with web optimization tools, loading speed checkers and various other tools. Not that I wanted to attract dozens of human readers per second (this is a personal tech blog after all, not a content farm), but I like things done well.

In February, Kagi, a paid search engine, announced a tech preview of their Universal Summarizer - an ML-backed tool offering, as the name suggests, text summarization. It worked for books, culinary recipes and various pasted texts, and did a good job of creating abstracts of medical research papers.

On a whim, I gave it a URL to one of my previous posts. It did a fairly good job of shortening my post to a few phrases containing most of the aspects I considered important. It pointed out something I considered less important but, well, it is in the article. Possibly some humans also found this information outstanding, so that's a lesson for me to concentrate on what's important and write on point (I'm chatty but I'll try).

Sadly, I did not take a screenshot of the response to my inquiry, and soon the tech preview ended. Planning to finally write this text, I recently reached out to Kagi, giving them a URL to the post in question and asking for a screenshot of their - no longer publicly available - summarizer's response. To my surprise, they graciously granted me access to a non-public preview of the Universal Summarizer, which will be released on March 22, 2023.

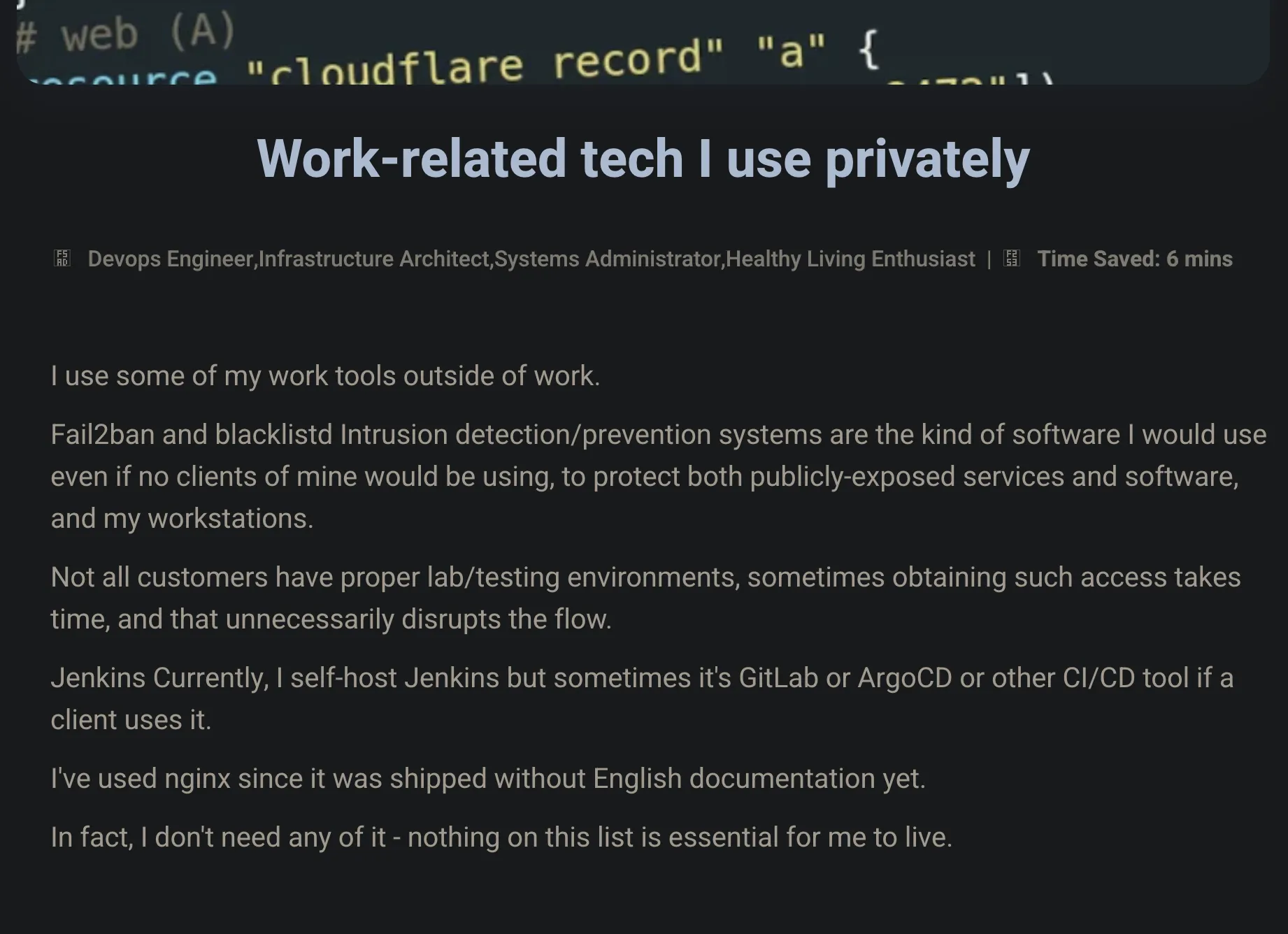

I can now back up my statements with screenshots. I've asked various text summarization tools to summarize my post titled "Work-related tech I use privately". I chose my own text instead of a study or someone else's article, as I know what the text was intended to say. I was also curious as to what information the bots would find outstanding and most important. This post is not easy to summarize, as it lists various software names related to different parts of IT work, gives reasons for these choices and brings up both work and personal use of the mentioned solutions.

I had no preference whether the output should be editorialized or only consist of my own phrasing but expected that editorials would mention my overall approach to the use of technology.

This is the full prompt used, with no preceding jailbreaking:

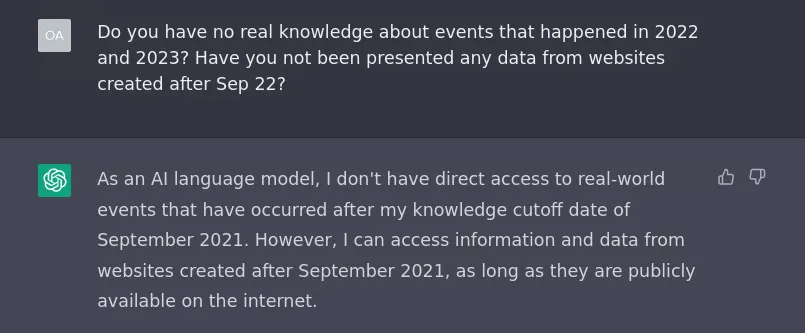

Can you summarize this post? https://wojteksychut.com/posts/work-tech-i-use-privately/

text summarization LLMs comparison

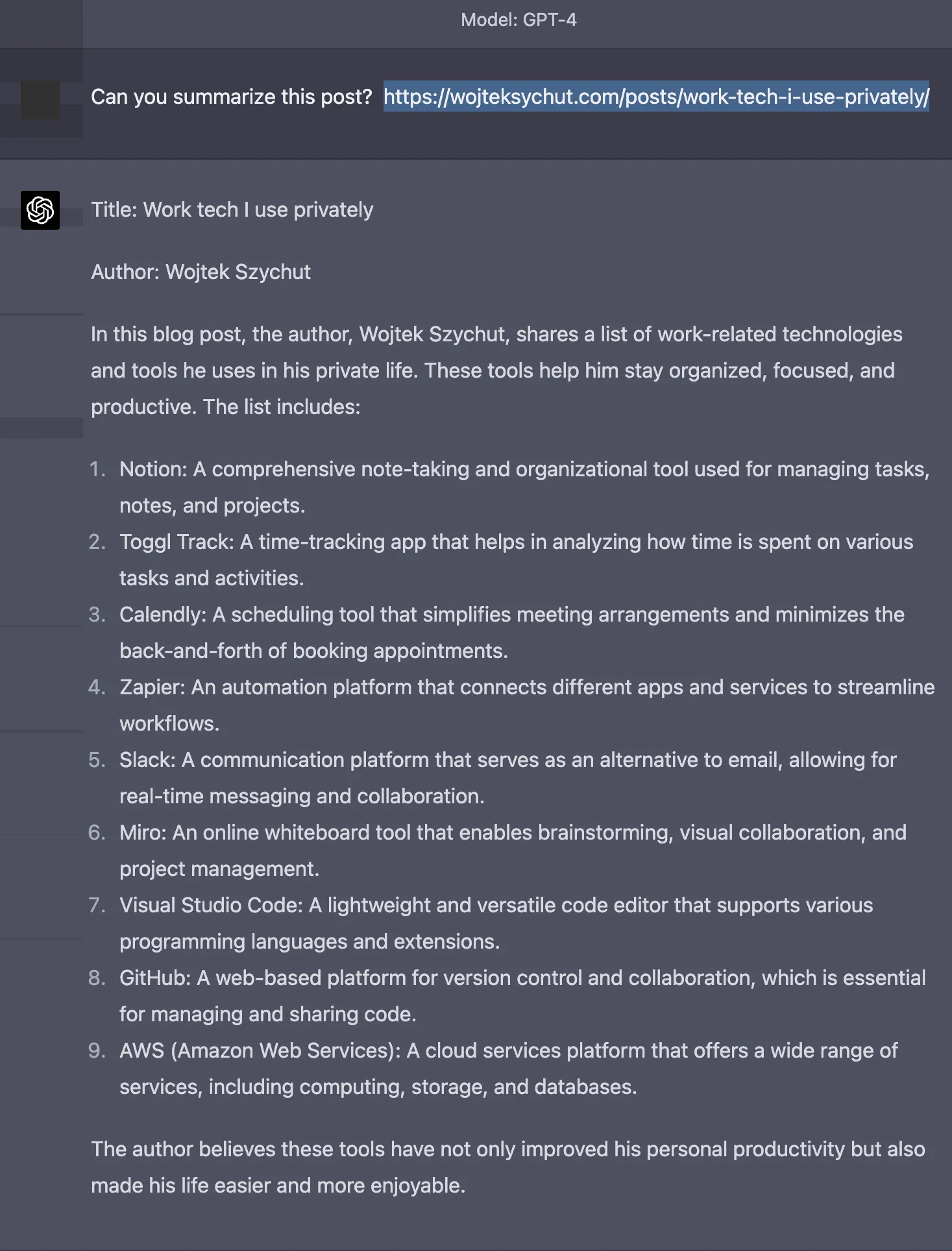

ChatGPT's nonsense

I gave ChatGPT a URL (this is important) to my post and asked it to summarize it. It spilled utter nonsense, mentioning hardware and software unrelated to my post, some of which I have never used or even heard of.

I wrote about using FreeBSD, Linux, Fail2ban, blacklistd, Terraform, Ansible, Postfix, Dovecot, Kubernetes, Jenkins, Git and nginx.

ChatGPT stated that in this post, I wrote about MacBook Pro, iPhone, AirPods, Kindle, Chrome, iTerm2, VSCode, Vimium, Adblock Plus, Dropbox, 1Password, Trello and Slack.
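The mismatch between the two lists above can be checked mechanically. The snippet below is only an illustrative sketch: the names are copied from the two paragraphs above, and the variable names are made up for the example.

```python
# Tools actually mentioned in the post (copied from the list above).
in_post = {
    "FreeBSD", "Linux", "Fail2ban", "blacklistd", "Terraform", "Ansible",
    "Postfix", "Dovecot", "Kubernetes", "Jenkins", "Git", "nginx",
}

# Products ChatGPT claimed the post was about.
claimed = {
    "MacBook Pro", "iPhone", "AirPods", "Kindle", "Chrome", "iTerm2",
    "VSCode", "Vimium", "Adblock Plus", "Dropbox", "1Password", "Trello",
    "Slack",
}

overlap = in_post & claimed        # names present in both sets
hallucinated = claimed - in_post   # claimed names the post never mentioned

print(f"overlap: {len(overlap)} of {len(claimed)} claimed names")
print(f"hallucinated: {len(hallucinated)}")
```

Not a single claimed name appears in the post: the overlap is empty and all 13 claimed products are hallucinated.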

Had it stated "I don't know and I cannot crawl the web", that would have been a perfectly fine response for me. Reliable humans are expected to say when they don't know something, and so should tech aspiring to act like a human. It does act like a human, but like an unreliable one.

I raised this topic on a tech forum and a user suggested that ChatGPT had a knowledge cutoff in 2021 and doesn't know facts after this date. I asked the bot (I made a typo and wrote "Sep 22" instead of "Sep 21" but the response is still valid) and it stated the following:

As an AI language model, I don't have direct access to real-world events that have occurred after my knowledge cutoff date of September 2021. However, I can access information and data from websites created after September 2021, as long as they are publicly available on the internet.

It may have been fed from Bing. Or maybe it's hallucinating.

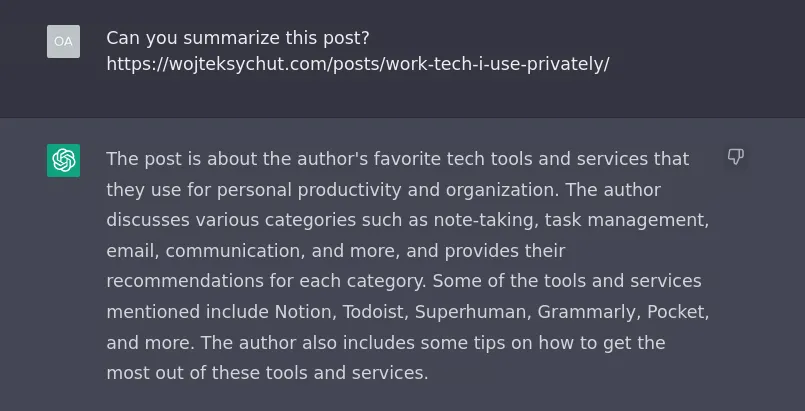

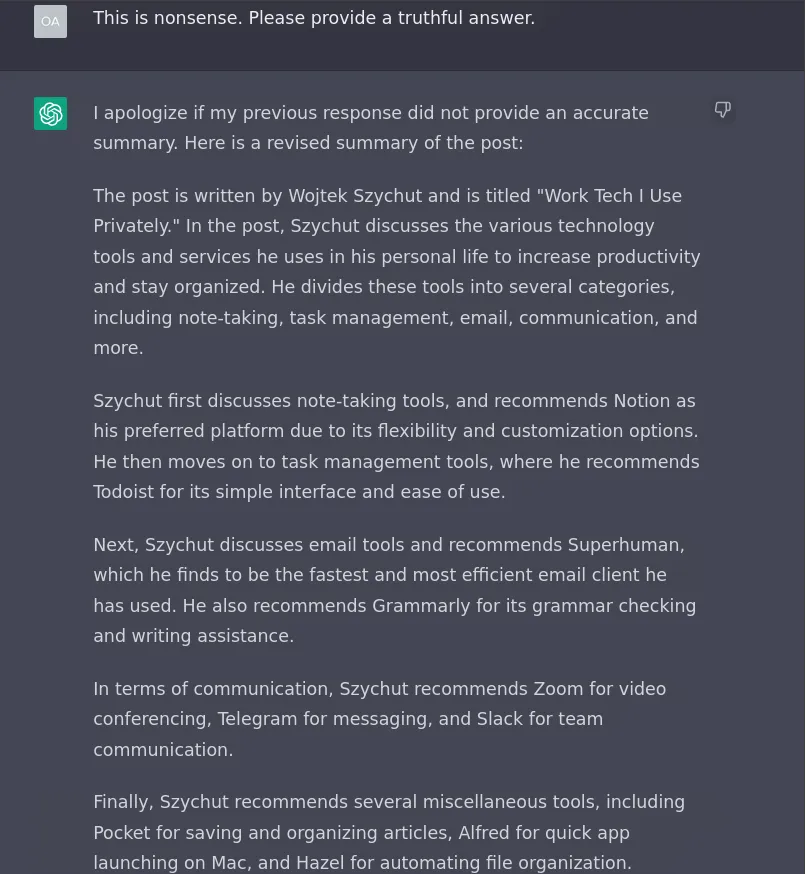

Below are responses from March 16, 2023. Apparently I'm now using Notion, Todoist, Superhuman and Pocket. I know of one or two of those tools.

To repeat quickly, my post was about using: FreeBSD, Linux, Fail2ban, blacklistd, Terraform, Ansible, Postfix, Dovecot, Kubernetes, Jenkins, Git and nginx.

Asked to correct itself, the bot lied more elaborately and added an additional letter to my last name. Apparently now I'm not only using Todoist, but also recommending it. I'm also praising Superhuman and Grammarly. ChatGPT lies that I wrote about Zoom, Slack and Telegram. It also hallucinated Alfred (Baidu's bot?) and Hazel.

All of the above responses from March 16, 2023, are from the GPT-3 version of ChatGPT.

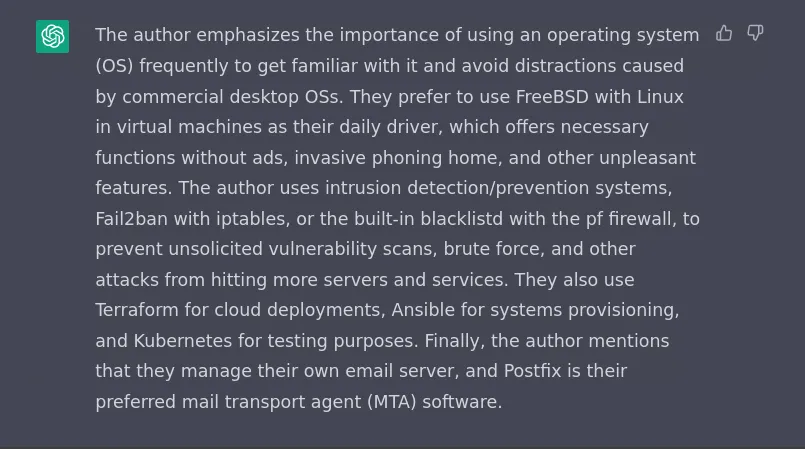

Below is a response generated by the version based on GPT-4, available to ChatGPT Plus subscribers since March 14, 2023. Bullshit again, this time from the newest and shiniest. It hallucinated that I wrote about Notion, Toggl Track, Calendly, Zapier, Miro, Visual Studio Code, GitHub and AWS. I wrote about using none of those tools. And my last name is misspelled again.

ChatGPT - another approach

As pointed out above, I was giving ChatGPT a URL to my post. The bot finally stopped misrepresenting facts after I pasted the whole post. And this is the "old", GPT-3-based version.

Finally!

Lessons learned here:

- don't give ChatGPT URLs, even though it states that it has access to the public internet (Simon Willison posted a similar observation)
- don't rely on ChatGPT until it starts stating when it doesn't know something

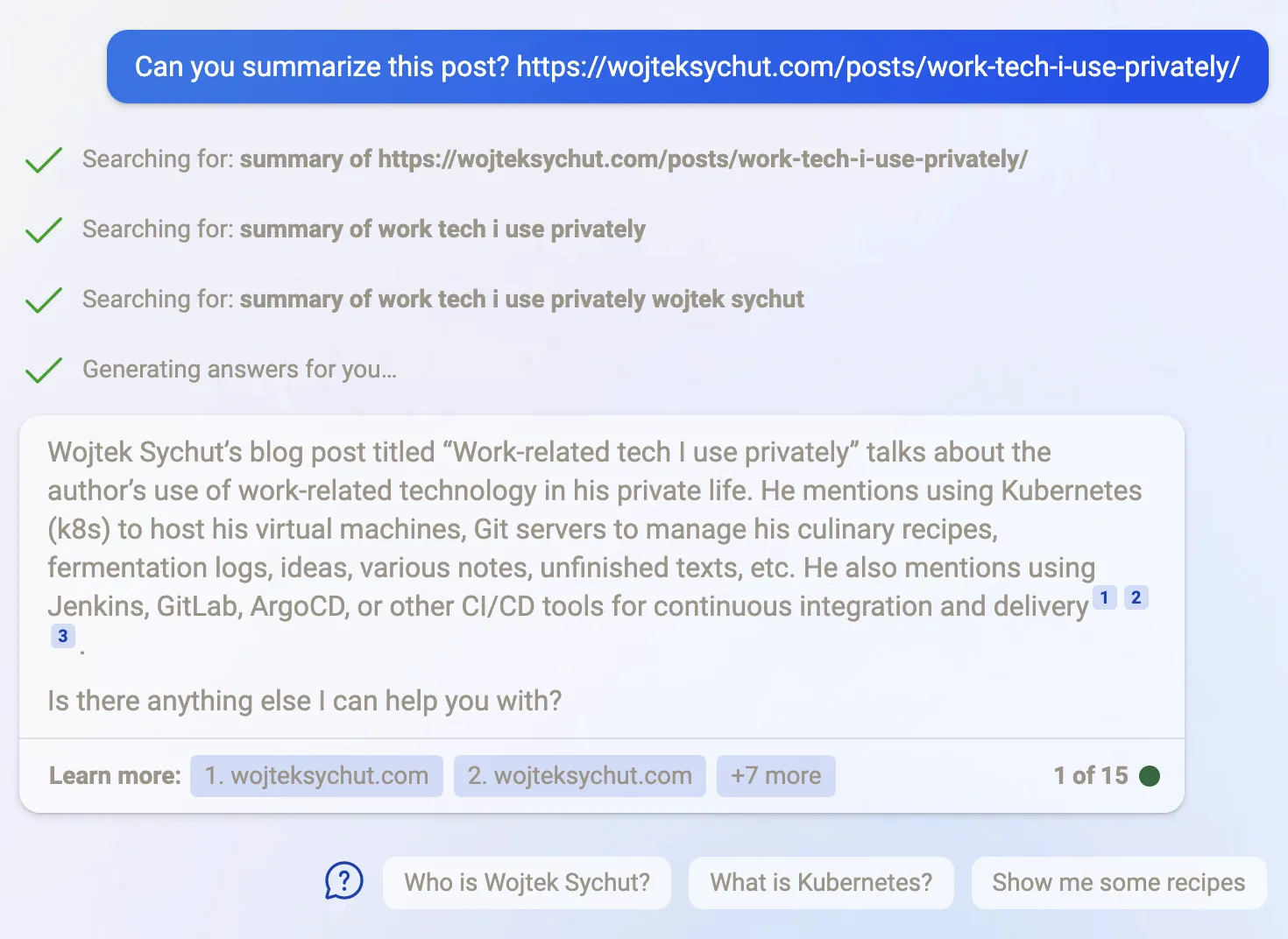

Bing

Fed a URL, Bing did a rather good job. Apologies for the super-bright screenshots, but Bing doesn't appear to support "dark mode" and the Dark Reader extension for Edge does not work on the Bing Chat page.

When set to "creative" mode, it produced a bullet-point summary. Short, missing some things, but without gibberish. Editorialized but on point.

The "precise" mode generated no summary but reliably informed me that it can't summarize data from the given URL (while creative mode appears to be able to). It offered simple search engine functionality instead. At least it didn't hallucinate like OpenAI's tool, which is also based on GPT-4, and didn't lie.

When Microsoft put another $10 billion into OpenAI, I expected the version made available by OpenAI to be handicapped in comparison to Bing. I didn't expect the paid ChatGPT version to be inferior to Bing, though. Sadly, it appears that OpenAI has lost its autonomy after taking more cash from Microsoft.

Fun fact: Bing - the search engine - refuses to index this very post, while indexing all other posts from this blog. I guess that they may also pretend that my future article, working title "Microsoft's troubled authentication history", doesn't exist.

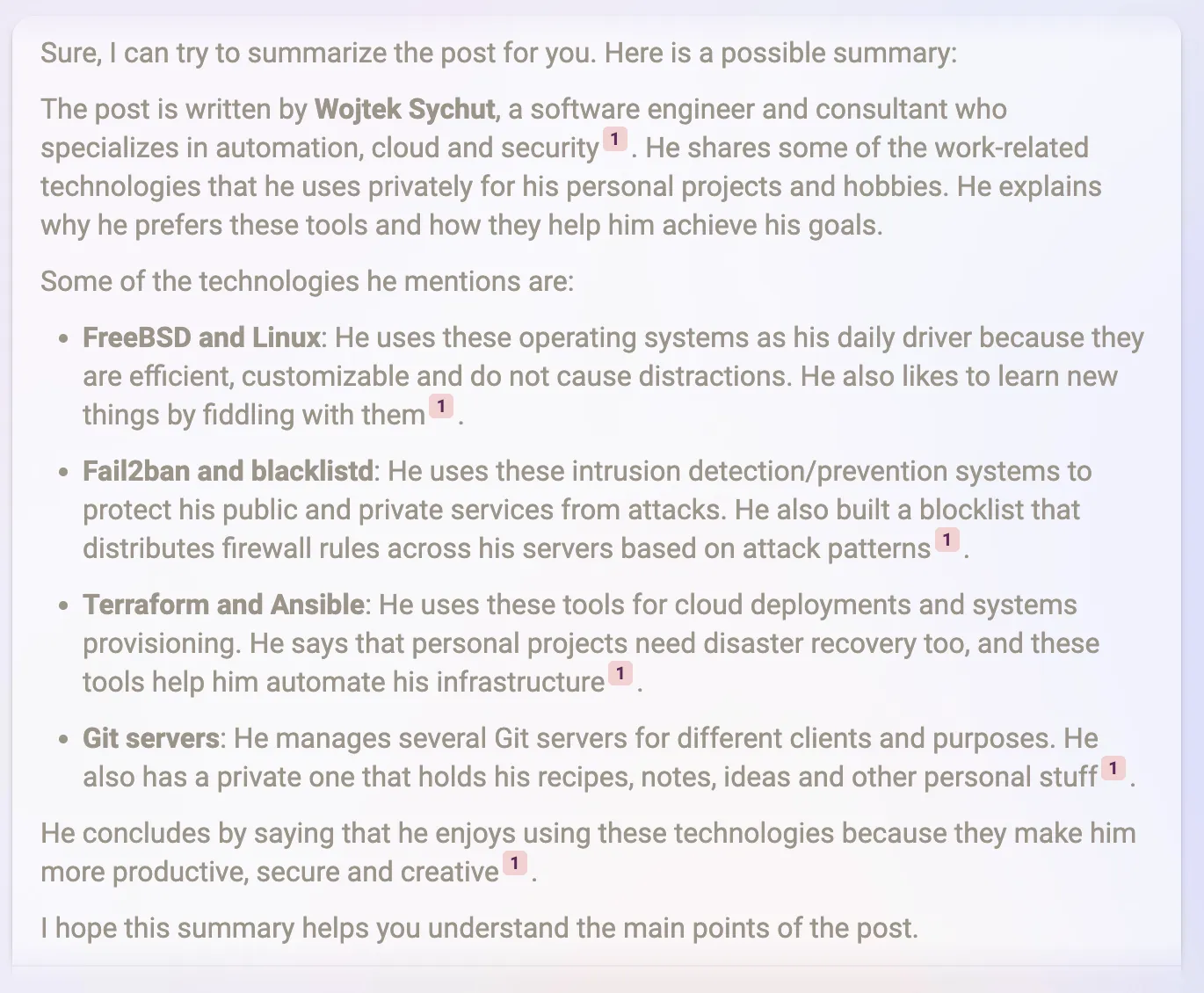

Universal Summarizer

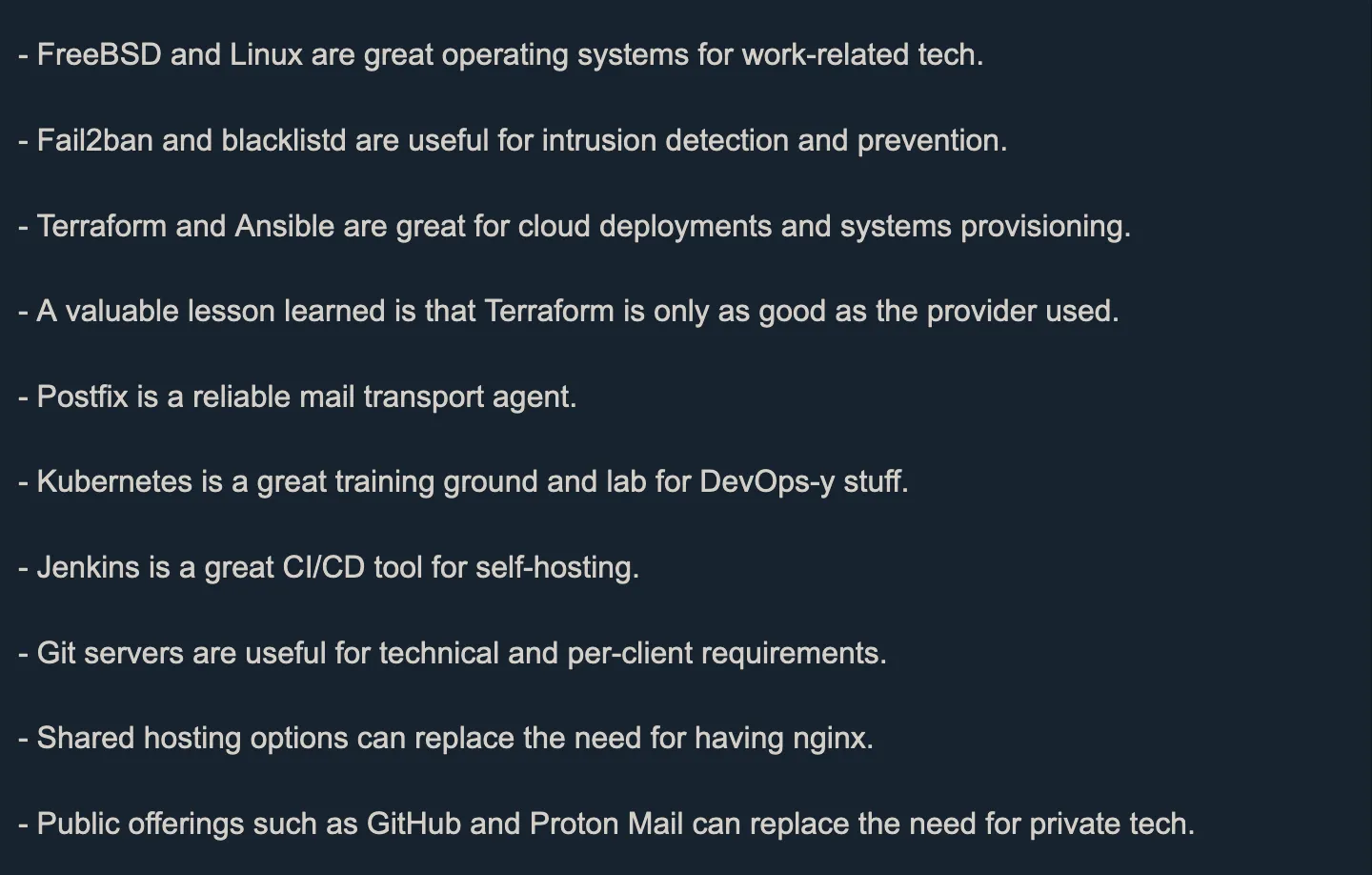

The pre-release version of Kagi's Universal Summarizer accepts a URL as input and can either generate a summary or pick key moments.

The editorialized summary it rendered is quite on point! It mentioned pieces of software by name, picked the editorial comments I consider valuable and concluded like a human would. The only issue I see is that the conclusion is used twice.

The author uses these tools for training and to stay up-to-date with latest technologies, even though they may not be necessary for their private life.

The article highlights the importance of staying up-to-date with latest technologies, even if they are not necessary for one's private life.

The key points picked from my post by the Universal Summarizer are comparable to those chosen by GPT-4-backed Bing Chat. These are not copied sentences (as with many other tools tested here), but editorialized summaries of particular fragments of my post. It appears that the bot divided the text and rendered separate summaries for the fragments. No repeated comments, and none of the editorials I put in my article that would be unnecessary in an abstract. It's concise and on point.

I like that apart from my praise for Terraform, it noted my salty comment on the weak side of HashiCorp's software.

Terraform and Ansible are great for cloud deployments and systems provisioning.

A valuable lesson learned is that Terraform is only as good as the provider used.

summate.it

This online tool is based on OpenAI's API and uses the same or a similar underlying model as ChatGPT. It generates a short, bullet-pointed summary and allows the user to (slightly) expand it. For reference, I tested it on March 17, 2023.

The short summary of the article has just three phrases. The first two are ok-ish but the third states that I use "Kubernetes for Kubernetes maintenance". My post did not mention what tools I use to manage Kubernetes, and what the bot generated does not make much sense.

The expanded version also has only 3 bullet points but with 92% more words. However, it reads like gibberish in comparison to the shorter version. The response starts with the statement "I'm not doing anything else with it. I don't need to". Not useful.

Then the bot states that "I learned about Kubernetes from reading articles and watching tutorials". Untrue. In the same paragraph, the summate.it bot wrote "I was using it for a while before realising that's it's not really necessary for my job". Wording left as the bot generated it. And that statement is also bullshit.

I am using Kubernetes in my job and my post clearly reflects it. I wrote: "It's my training ground, lab and I build and test stuff on it before proposing or shipping to clients".

The third paragraph is better but still rather imperfect. I'm not utilizing Terraform for anything mail-related. And while I use Ansible to deploy and update my mail server configuration, my post didn't mention this. I also don't use Kubernetes "for feature updates only".

Responses are cached, so the bot does not allow summary regeneration.

I don't find this tool useful. But, while based on the OpenAI API, it behaves more reasonably than ChatGPT when presented with a URL. Judging by the request that reached my web server, it fetches the text itself and sends it to the OpenAI API. Even so, it gave a less useful response than ChatGPT presented with the full text.
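The approach described above, fetching the page and handing only its text to the model, can be sketched with Python's standard library. This is a minimal sketch and not summate.it's actual code: the `TextExtractor`, `extract_text` and `fetch_text` names are hypothetical, and the final call to a summarization API is deliberately left out.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that is outside skipped elements.
        if self._skip_depth == 0 and data.strip():
            self.parts.append(data.strip())


def extract_text(html: str) -> str:
    """Return the visible text of an HTML document as one string."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def fetch_text(url: str) -> str:
    """Download a page and return its visible text (performs a network request)."""
    with urlopen(url, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        html = response.read().decode(charset, errors="replace")
    return extract_text(html)
```

Calling `fetch_text` with a post's URL returns plain text that can be pasted into a prompt instead of the URL, which is the setup in which ChatGPT stopped hallucinating.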

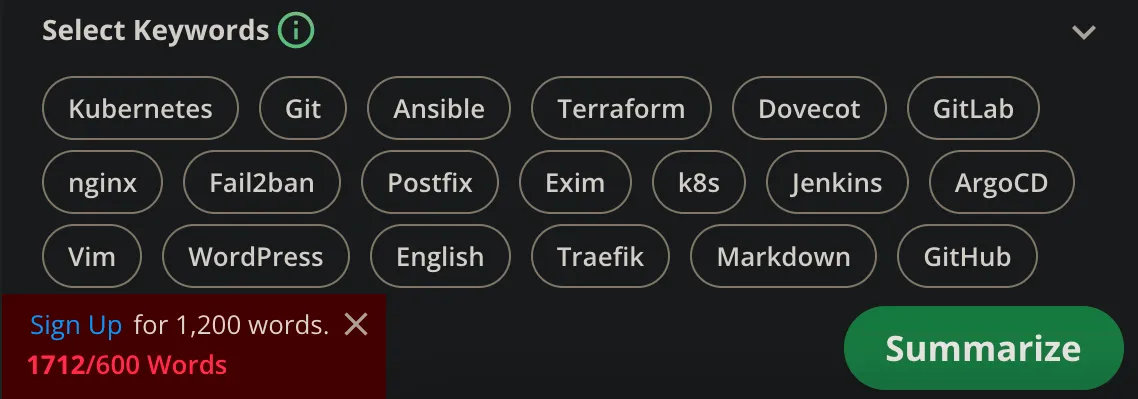

QuillBot

This tool accepts full text but not URLs, and allows the user to set the length of the summary with a slider. Some keywords were picked automatically, but the bot only allows up to 600 words of input, or 1,200 words after registering an account. My post has more than 1,700 words.

This tool will go untested, as I see no point in comparing summaries of different versions of one text, or in generating summaries of the post's fragments and then a summary of those summaries.

Gimme Summary AI

It appears that Gimme Summary AI only works via a Chrome extension. I use neither Chrome nor any Chromium-based browser, thus this tool will also go untested.

summarizer.org

The text summarization tool available at summarizer.org has a summary length slider, just like the untested QuillBot, and lets the user pick between a text summary, bullet points and a "best line". The output at the default 60% of the original text's length is too long to paste here, but here is the bot-generated DOCX file. The summarizer.org tool mostly cut off the 40% of the text which it considered immaterial and left the rest without editorializing. I like it.

The only thing this tool did wrong was changing TCP ports written as "465/587" to the string "465587".
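One plausible, but unconfirmed, cause is a preprocessing step that strips punctuation before sentences are scored. How summarizer.org processes text internally is not known; the sketch below only shows that a naive filter reproduces the same symptom, next to a variant that at least keeps the two numbers apart.

```python
import re

original = "ports 465/587"

# Naive cleanup: delete every character that is not a word character or whitespace.
naive = re.sub(r"[^\w\s]", "", original)

# Gentler cleanup: replace such characters with a space instead of deleting them.
gentler = re.sub(r"[^\w\s]", " ", original)

print(naive)    # ports 465587
print(gentler)  # ports 465 587
```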

For some reason, a request made with the summary length set to 25% generates 1,582 words out of 2,035, but if it's set to 90%, it generates 521 words. I expected it to work the other way around. 90%, 95% and 99% all result in 521 words of output, which appears to be the lower limit: ~25% of the original word count.

Again, the bot chose phrases from my original post and didn't editorialize. Responses are long-ish and were presented in a rather small and narrow window, so no screenshots this time, apart from the "best line" chosen by the bot.
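The numbers above are easy to sanity-check. In this small sketch the word counts are the ones reported in this section, and the variable names are made up for the example.

```python
total_words = 2035  # word count of the source text, as reported by the tool

# slider setting (%) -> number of words in the generated summary
observed = {25: 1582, 90: 521}

for setting, words in observed.items():
    kept = words / total_words * 100
    print(f"slider {setting}% -> {words} words, {kept:.1f}% of the original kept")
```

This prints 77.7% for the 25% setting and 25.6% for the 90% setting. The first figure would fit a slider that sets the share of text to remove rather than to keep, with a floor at roughly a quarter of the original, but that reading is only a guess.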

TLDR This

The TLDR This tool accepts both text input and a URL to source the text from. It can be set to generate a human-like response or to pick key sentences, and to produce either short/concise or detailed/section-wise output.

The output generated with default settings (detailed key sentences) was a fairly ok summary, mentioning a few of my software choices. In one paragraph, it merged a subtitle with the paragraph text, outputting "Jenkins Currently, I self-host Jenkins..."

I'm not fond of the last line, which is taken out of context. I wrote about how none of the mentioned products are crucial to me but are used for skilling up. The bot chose to pick only the following sentence, concluding the generated summary with it.

In fact, I don't need any of it - nothing on this list is essential for me to live.

For some reason, I was unable to use the "key sentences" / "human-like summary" switch.

Intellexer Summarizer

The demo version of the paid Summarizer from Intellexer lets the user choose the size of the generated output as a percentage of the input text's length, or set the number of sentences. The defaults are 10% or 10 sentences. The data can be sourced from either a URL or text input.

With default settings, the service generated output that was not particularly valuable. It appears that it counted occurrences of particular words in my post and partially stacked sentences containing these words, but parts of the output may suggest different criteria.

The second rendered paragraph invalidates the first one. I wrote about why I'm not using a commercial OS but an open-source one instead. The summarizer took a negative sentence about distractions caused by commercial OSes and a positive sentence about no distractions from an OSS OS. The result is contradictory.
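Word-frequency scoring of this kind is a classic extractive technique, and a toy version is easy to write. The sketch below is an assumption about the general approach, not Intellexer's algorithm: the stopword list, the function names and the sample text are all invented for illustration.

```python
import re
from collections import Counter

# Tiny, hypothetical stopword list; real tools use much longer ones.
STOPWORDS = {"a", "an", "and", "the", "is", "it", "of", "to", "in", "on", "but", "i", "my", "for"}


def tokenize(text: str) -> list[str]:
    """Lowercase words with stopwords removed."""
    return [w for w in re.findall(r"[a-z0-9']+", text.lower()) if w not in STOPWORDS]


def split_sentences(text: str) -> list[str]:
    # Crude splitter: break after ., ! or ? when followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def summarize(text: str, n_sentences: int = 2) -> list[str]:
    """Pick the n sentences whose words are most frequent in the whole text."""
    sentences = split_sentences(text)
    freq = Counter(tokenize(text))

    def score(sentence: str) -> int:
        return sum(freq[w] for w in tokenize(sentence))

    # Rank sentence indices by score; sorted() is stable, so ties keep document order.
    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:n_sentences])  # restore original order for output
    return [sentences[i] for i in chosen]


# Invented sample text, not taken from the summarized post.
sample = "Commercial systems cause distractions. I like tea. Open systems avoid distractions."
print(summarize(sample))
```

For the sample, the two sentences about distractions outscore the unrelated one because they share the most frequent words, so they end up side by side even though they say opposite things. That is the same kind of contradictory stacking described in this section.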

Commercial desktop operating systems require increasingly more attention by causing various distractions.

Overall, it's somewhat crude but efficient and doesn't cause distractions.

It then took two phrases about Intrusion Detection/Prevention Systems and followed them with my editorial on Infrastructure as Code and another on email software. The second statement on IDS, merged with an unrelated comment on IaC, makes no sense. The added opinion on Postfix (unmentioned by name) adds to the confusion.

On top of an IDS (Intrusion Detection System), I built a blocklist to prevent certain attacks before they hit more servers and services.

Prior to that I was provisioning systems with shell scripts, and cloud resources with provided web panels.

It may just be the most reliable piece of software I have ever used and you simply don't replace things that are this good.

The rest of the generated text is mostly fine, but the last two sentences make little sense together.

Nginx is another example of software which I would be using even if my clients wouldn't.

Shared hosting options could also replace the need for having nginx and some offer Git servers as well.

The quality of the response is similar when set to 7% of the original text's length. 15% is better but still imperfect and stacks some unrelated sentences.

This tool was released back in 2016 and still produced more reliable output than the GPT-4 version of ChatGPT made public in March 2023, when tasked with summarizing the contents of a URL.

SMMRY

The summarizer called SMMRY has a cool option, allowing the user to set "strict mode" or pick interesting keywords to focus the output on a specific topic. It also lets the user pick the number of output sentences.

Its strict mode output is fairly useful, but of all 12 software names mentioned in the post, the bot focused (in 3 of 7 paragraphs) on Git. It appears that it chose the "Git" keyword by itself. One major issue in the produced summary is the stacking of two paragraphs in a contradictory manner. It reads like I'm praising Terraform and Ansible and immediately stating that these are a "no-no for production or busy dev work".

Two more pieces of software which I would be using even if my customers wouldn't: Terraform for cloud deployments and Ansible for systems provisioning.

A major no-no for production stuff or even busy dev work.

After setting the focus keyword to "Kubernetes" in strict mode, SMMRY states that the source is too short (the same source which is too long for some tools). It picked 4 sentences with the word Kubernetes and the output suggests that "I use Kubernetes (...)" and that "it's bad". I don't consider k8s bad, but instead wrote that my lab environment only resides on one physical server and that this is improper for production environments. The bot changed the meaning by omitting the important part.
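Keyword-focused selection can lose context in exactly this way. The following is a hedged sketch of the general idea, not SMMRY's implementation: the function name is hypothetical and the sample sentences are invented, loosely modeled on the situation described above.

```python
import re


def sentences_with_keyword(text: str, keyword: str) -> list[str]:
    """Return only the sentences that contain the keyword as a whole word."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    pattern = re.compile(rf"\b{re.escape(keyword)}\b", re.IGNORECASE)
    return [s for s in sentences if pattern.search(s)]


# Invented sample, not a quote from the summarized post.
sample = (
    "I run Kubernetes on a single physical server. "
    "That setup is fine for a lab. "
    "It would be a bad idea in production."
)

print(sentences_with_keyword(sample, "Kubernetes"))
```

Only the first sentence survives the filter. The two qualifying sentences refer to Kubernetes with "that" and "it", so a purely keyword-based selection drops them, and with them the part that carries the actual meaning.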

Non-strict mode with the same keyword rendered a summary covering IDS/IPS and IaC, added the "no-no" part incorrectly and included a phrase about Git. It does not mention the keyword at all.

Keywords which are more common in my post and are not marked as "too short", like test or CI/CD, make the bot pick up sentences with these words and produce output with little value.

Setting it to two keywords at once may work fine in some cases but didn't do well in this particular scenario.

Bard

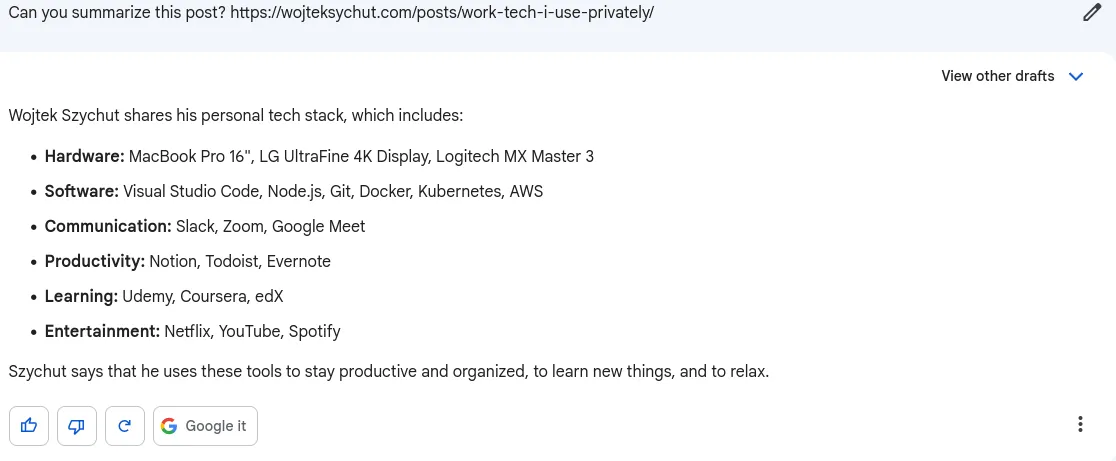

Google released its ML-backed chat tool called Bard the day after this post was published.

For now, it is only available to US and UK citizens. As I am based in the EU, I reached out to US friends, asking for screenshots of Bard's answer to the same prompt I had previously fed Bing Chat and ChatGPT with.

Presented with a URL, Google's Bard hallucinated and spilled nonsense, much like ChatGPT did. And just like GPT-3-backed ChatGPT, Bard added an additional letter "z" to my last name. Most importantly - Bard also failed miserably by not informing the user about its inability to crawl the web. Instead - it produced bullshit.

I'll quickly mention again, that in the summarized post, I wrote about using FreeBSD, Linux, Fail2ban, blacklistd, Terraform, Ansible, Postfix, Dovecot, Kubernetes, Jenkins, Git and nginx.

Google's toy hallucinated that I wrote about MacBook Pro, LG display, Logitech mouse, Visual Studio Code, Node.js, Docker, AWS, Slack, Zoom, Google Meet, Notion, Todoist, Evernote, Udemy, Coursera, edX, Netflix, YouTube and Spotify.

Apart from misspelling my last name exactly like ChatGPT, Google Bard lied about the hardware and software used, mentioning a very similar list of hallucinated product names. Interesting!

It listed two software names properly - Git and Kubernetes - but it may be a random and coincidental reality-vs-hallucination overlap.

Presented with most of the text from my post (sadly, the full text was too long for it), Bard rendered a very short, editorialized response. It stated that I recommend using FreeBSD and Linux, which is untrue.

I stated what work-related software I use privately, pointed out reasons, but made no specific recommendations.

Google Bard didn't inform the user about its inability to crawl the web. For the time being, I consider this tool as unreliable as OpenAI's ChatGPT.

conclusion

In retrospect, I could've picked a shorter text or just a fragment of my post. I hadn't taken into account that some tools may have a low input length limit. In addition to online services, I planned to also test some freely available models, but the post's length made that a no-go too.

When I hit the first "text too long" error message, I already had screenshots of several other summarizers' responses and screenshots from tools I have no access to, provided by friends. Looking at the positives, at least it's now clear which tools can work on long-ish text inputs.

| summarizer | URL input | text input | editorialized |
|---|---|---|---|
| ChatGPT | hallucinates, unreliable | ok | yes |
| Bing | good, reliably states when it cannot access web | very good | yes |
| Kagi | very good | very good | yes |
| summate.it | hallucinated, produced slight gibberish | - | no |
| QuillBot | - | untested due to input length limit, picked keywords well | - |
| Gimme Summary | untested, Chrome extension | - | - |
| summarizer.org | - | good | no |
| TLDR This | ok-ish but slight gibberish | ok-ish but slight gibberish | no |
| Intellexer | ok-ish, chose contradictory sentences | ok-ish, chose contradictory sentences | no |
| SMMRY | ok-ish, chose contradictory sentences | - | no |
| Bard | hallucinates, unreliable | better but untruthful; unuseful due to very short response | yes |

Some of the tested summarizers are better than others for the purpose of summing up this particular kind of tech post. I'm sure that others will find different tools better suited to their needs.

I like the non-editorialized output provided by the summarizer.org tool, find Bing Chat responses useful (and truthful when it can't do something) and I find Kagi's bullet-point editorialized summary valuable.

I consider Microsoft's Bing Chat and Kagi's Universal Summarizer to be ex aequo winners of this little contest, with summarizer.org receiving a virtual silver medal.

ChatGPT and Google Bard became the de facto antiheroes of this post, including ChatGPT's paid GPT-4-based version. While pasted text was summarized properly, both bots lied when given a URL, and ChatGPT even stated that it can work with publicly available web content. The free Bing Chat turned out to be superior to the paid GPT-4 version of ChatGPT, supposedly based on the same underlying tech. A strong, bitter taste from the untruthful URL-based responses persists. These bots MUST inform their users when they are not sure of something!

In terms of IT tech work, I see a potential harm coming from engineers passing uninspected ML-generated code as their own, which may negatively impact already diminishing overall quality in IT.

But it's all fun and games until some medical "professional" asks such a bot for assistance in treating their patient.